Richard Salgado, director of law enforcement and information security at Google, Sean Edgett, acting general counsel at Twitter, and Colin Stretch, general counsel at Facebook, are sworn-in during a Senate Judiciary Subcommittee on Crime and Terrorism hearing titled 'Extremist Content and Russian Disinformation Online' on Capitol Hill, October 31, 2017 in Washington, DC. (Getty)[/caption]


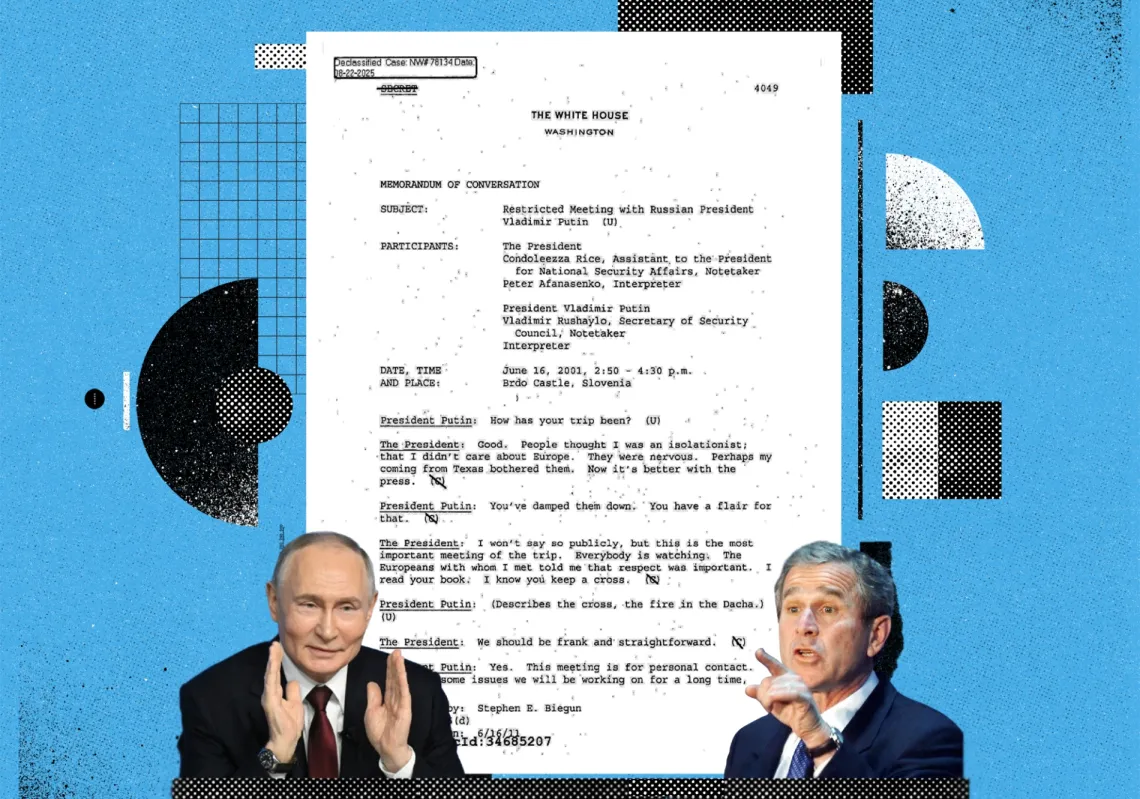

by Jamie M. Fly and Laura Rosenberger

Before the terrorist attacks of September 11, 2001, the idea of turning civilian airplanes into weapons was inconceivable to most. And until it was discovered that Russian President Vladimir Putin had interfered in the 2016 U.S. election, it was unimaginable that social media platforms could be weaponized to undermine U.S. democracy. But unlike the reflective bipartisan process that followed 9/11, the task of addressing U.S. vulnerabilities to Russian meddling remains mired in debates about what actually happened.

Special Counsel Robert Mueller’s indictment of the Internet Research Agency, a Kremlin-linked troll farm, underscores the significant role that social media played in Russia’s efforts to interfere in the 2016 U.S. election. Although this was an important and welcome step, Americans cannot simply look backward. The social media companies whose platforms were exploited must take action to close off the vulnerabilities that Putin and others have exposed and continue to exploit. Russia’s efforts to undermine U.S. democracy, including via social media, continue today. Taking proactive steps to address vulnerabilities that Putin is exploiting is the best way for these platforms to regain the trust of the American people and avoid government overregulation.

THE WEAPONIZATION OF SOCIAL MEDIA

Social media platforms, which are often used for political campaigns and by advertisers, have provided Putin with a powerful weapon to take Cold War KGB propaganda tactics from analog to digital. Attempts to interfere in elections are just one part of a broader strategy to weaken democracies by exploiting divisions, sowing chaos, and casting doubt on institutions.

For many Americans, Russia’s interference in the 2016 presidential election may seem distant and abstract, or just another front in the online battle of ideas. But in reality, Russia’s operations influenced real people to take action offline, unwittingly serving Russia’s interest in turning Americans against one another. Consider the tale of two rallies in Houston, Texas, on May 21, 2016, where Americans inspired by posts on Facebook showed up to protest outside an Islamic center. Some were drawn by a Facebook group purporting to support Texas secessionism; others thought they were attending a rally organized by a group called United Muslims of America. But attendees did not learn that both groups were fabrications of the Internet Research Agency, designed to pit Americans against one another, until the true nature of the incident emerged over a year later through congressional investigations.

A recent study by Columbia University’s Jonathan Albright of tweets from accounts created by the Internet Research Agency showed that much of its activity involved amplifying and distorting information from mainstream media in order to promote extreme viewpoints and increase polarization. A dashboard that our organization created to track a network of Russian-linked accounts on Twitter has shown similar patterns; this network often amplifies extreme content to attack a range of targets perceived as hostile to the Kremlin’s interests, including both Republicans and Democrats, and to promote extreme views on race, religion, and politics.

Fake personae and pages created by the Internet Research Agency fooled even members of Congress and the media. And although Twitter, Facebook, Google, and YouTube have received the most attention, this is a cross-platform problem, with activity linked to Russia’s operations also identified on Tumblr, Instagram, and Snapchat. Each platform plays a different role in the information ecosystem, and in an adversarial government’s efforts to distort the information space by making extreme views seem more prevalent and by increasing polarization.

BIG TECH'S DEFENSIVE POSTURE

Preexisting vulnerabilities in U.S. politics and society, particularly hyperpolarization, provided fertile ground for this kind of activity to flourish. But Mueller’s indictment leaves no doubt about the significant role technology and social media platforms have played in Russia’s operation, and therefore the outsized role these companies must play in halting their exploitation to harm U.S. democracy.

Congressional hearings over the past year have compelled Google, Facebook, and Twitter to investigate Russia’s weaponization of their platforms and to make that information publicly available. But neither policymakers nor the companies themselves have yet adequately prepared for what may come next.

Under public pressure from Congress, the major social media companies announced initial steps to better detect, halt, and prevent information operations going forward. They have touted efforts to increase transparency of political advertising, improve information vetting, prioritize reliable information, and maintain and protect their platforms against spam, bot, and troll abuse. Some of these reforms have resulted in active steps to improve the quality of their platforms, including banning Russia-funded RT and Sputnik from purchasing ads, requiring all state-funded media to provide a disclaimer, enacting policies to prevent the purchasing of political advertising in foreign currencies, or rewriting search algorithms to downgrade suspect content.

But these efforts vary widely in scope, volume, intensity, transparency, and effectiveness. And all of them have been driven in part by social media companies’ attempt to downplay public criticism and shift attention away from the weaponization of their platforms. Responses to recent congressional questions indicate that the companies’ investigations of Russia’s interference remain retrospective and largely occur only when prompted by outside investigators. As a report from the NYU Stern Center stressed, “the Internet platforms must move from a reactive to a proactive approach” to address abuse and misuse. These platforms have an incentive to ensure that authentic communication can continue on their platforms. Otherwise users will begin to lose trust in a platform as a valuable forum, and advertisers will begin to doubt the efficacy of spending resources on it, as major advertiser Unilever announced last week.

NEXT STEPS

The first step to remedy the situation is for tech companies to admit that an ongoing problem exists, accept help from the government and independent researchers, and expose challenges in a timely manner. This should start, as NYU Stern’s report recommends, with “across-the-board internal assessments of the threats … call[ing] on engineering, product, sales, and public policy groups to identify problematic content, as well as the algorithmic and societal pathways by which it is distributed.” This will inevitably raise fundamental questions about the vulnerabilities created by policies and practices of these platforms—but it is essential to have meaningful and open discussions of those challenges in order to address them. Tech companies also need to develop a mechanism to share threat information about foreign interference and coordinate responses with each other.

Rebuilding trust between Silicon Valley and the U.S. government is another key step in addressing the Russian cyberthreat. The Internet Research Agency’s use of Virtual Private Networks (VPNs), U.S.-based servers, and stolen identities of Americans underscores the difficulty in identifying accounts being used for foreign influence. The Internet Research Agency is likely not the only troll network used by Russia—other networks need to be identified and neutralized as well. Yet the lack of trust between Silicon Valley and Washington, which grew in the wake of Edward Snowden’s disclosures about government surveillance and utilization of technology platforms, has led technology companies to resist information sharing with the intelligence community. This needs to change. Both the government and the tech platforms hold information and data necessary to fully identify these actors, but neither can do so alone. The FBI’s new social media and foreign influence task force could become one such vehicle for public-private cooperation, but it needs to be well resourced, involve meaningful two-way information sharing, and be supported from the top on both sides, including U.S. government policymakers.

Greater communication between tech developers and national security professionals is also critical to identifying potential vulnerabilities in new and emerging technologies, such as artificial intelligence, that could be exploited by adversaries. The advent of technology allowing for manipulation of video and audio content will pose a particular challenge and demands real mitigation strategies now, before its exploitation becomes rampant. Some researchers have suggested that the same tools used to alter content can be used to identify altered content; the question is whether such content can be identified and removed fast enough to halt its spread. Elon Musk has even argued for regulating robotics and artificial intelligence “like we do food, drugs, aircraft & cars. Public risks require public oversight.”

Social media companies should also increase information sharing with independent researchers such as those at universities or data-centric think tanks, who have played an important role in shining light on Russia’s interference activities on social media. To date, these researchers’ efforts have been limited by the reluctance of many companies to share data with them. But working with a trusted group of researchers, in a manner designed to protect private information about users, is critical to both better inform the work of internal investigators and ensure accountability.

[caption id="attachment_55255741" align="aligncenter" width="4243"]

With examples of Russian-created Facebook pages behind him, Sen. Patrick Leahy (D-VT) questions witnesses during a Senate Judiciary Subcommittee on Crime and Terrorism hearing titled 'Extremist Content and Russian Disinformation Online' on Capitol Hill, October 31, 2017 in Washington, DC. (Getty)[/caption]


The platforms must utilize both technical and human fixes to ensure they adequately protect their users from manipulation and the expanding use of automated bots for nefarious purposes. Authenticating users and ensuring that every account is connected to a real human being is likely necessary to address Russia’s use of fake personae, accounts, and pages to create and spread content. It’s not enough to make this the policy, which Facebook already does; enforcement is critical but requires not just machines but human review. Facebook is adding staff to address this challenge, but given the scale of information across the platform—let alone other platforms—it is not clear that the problem can be tamed through human review. At the same time, while authenticated by the platforms via secure means, users should be allowed to remain publicly anonymous, provided they are not deceiving others about their true identity, given the importance of such avenues for activists in authoritarian states. The use of deception by anonymous users, however, should be considered a violation of terms of service. Similarly, automation by accounts clearly identified as bots can provide important services, such as Amber Alerts and a bot that alerts the public to earthquakes in San Francisco; it is the use of automation for manipulation that must be addressed.

A majority of Americans now rely on online platforms for news and information—67 percent of Americans get at least some of their news from social media, while 81 percent of Americans get their news somewhere online or through apps. With their algorithms determining what content is delivered into Americans’ feeds, the question is not whether these platforms are publishers but whether they will publish responsibly. Given this reality, social media companies also need to be more transparent with their users about the vast amount of data that is collected on them and which drives those algorithms—a mechanism that the Internet Research Agency had a whole section devoted to gaming. They should be more transparent about their internal policies and processes for trust and safety so that users are better empowered to decide how and where to obtain news and information, as well as how best to control the data that is collected on them.

Researchers have also pointed to broader questions about the targeted online advertising that underpins the business model of these companies. As Dipayan Ghosh and Ben Scott of New America point out, “there is an alignment of interests between advertisers and the platforms. And disinformation operators are typically indistinguishable from any other advertiser. Any viable policy solution must start here.” This question cuts to the heart of the business model of many companies, particularly Google and Facebook, but an honest discussion of this issue is critical to addressing fundamental vulnerabilities that Russia’s operations exploited. Disentangling advertising from data collection and micro-targeting, and moving back to the less individualized advertising we see on TV or in print, is worth discussion.

THE FUTURE OF AMERICAN DEMOCRACY

As we see with the range of steps that these companies have taken with respect to online political advertising alone, the U.S. government has an important role to play in setting uniform standards and closing loopholes that Russia exploited. Congress and the Federal Election Commission should ensure transparency around political advertising online and regulate it in the same way that television, radio, and print advertising is regulated. The bipartisan Honest Ads Act is a narrowly tailored bill that would close some of these loopholes by ensuring transparency on such advertisements and disclosure of their purchasers, but it remains stalled in Congress due to the lack of presidential leadership on the issue.

And Americans themselves need to tackle the demand side of the equation—the societal forces of polarization and erosion of faith in media and institutions that make Americans more vulnerable to interference. This will require long-term efforts to reduce polarization, including overcoming partisanship on questions of Russian interference in the 2016 election; to reverse the fractures in civil discourse; and to halt the corrosion of institutions from within. Americans—including the business community—must accept greater responsibility for their democracy and work to improve media literacy.

Tech platforms still have the potential to serve as democracy accelerators, liberating and empowering people around the globe. But the companies must do the hard work, and fast, to mitigate the downsides and realize that potential. Bill Gates recently stressed that self-regulation and meaningful steps by tech companies to address these challenges are necessary to head off heavy-handed government regulation like that in Europe.

Tech companies are not alone in falling behind in addressing this challenge—the U.S. government’s failure to take action in the face of this threat has made Americans vulnerable. But Silicon Valley still has an opportunity to begin to help ensure that Americans are not manipulated by a foreign power when they use their products to engage in political discourse.

This article was originally published on ForeignAffairs.com.