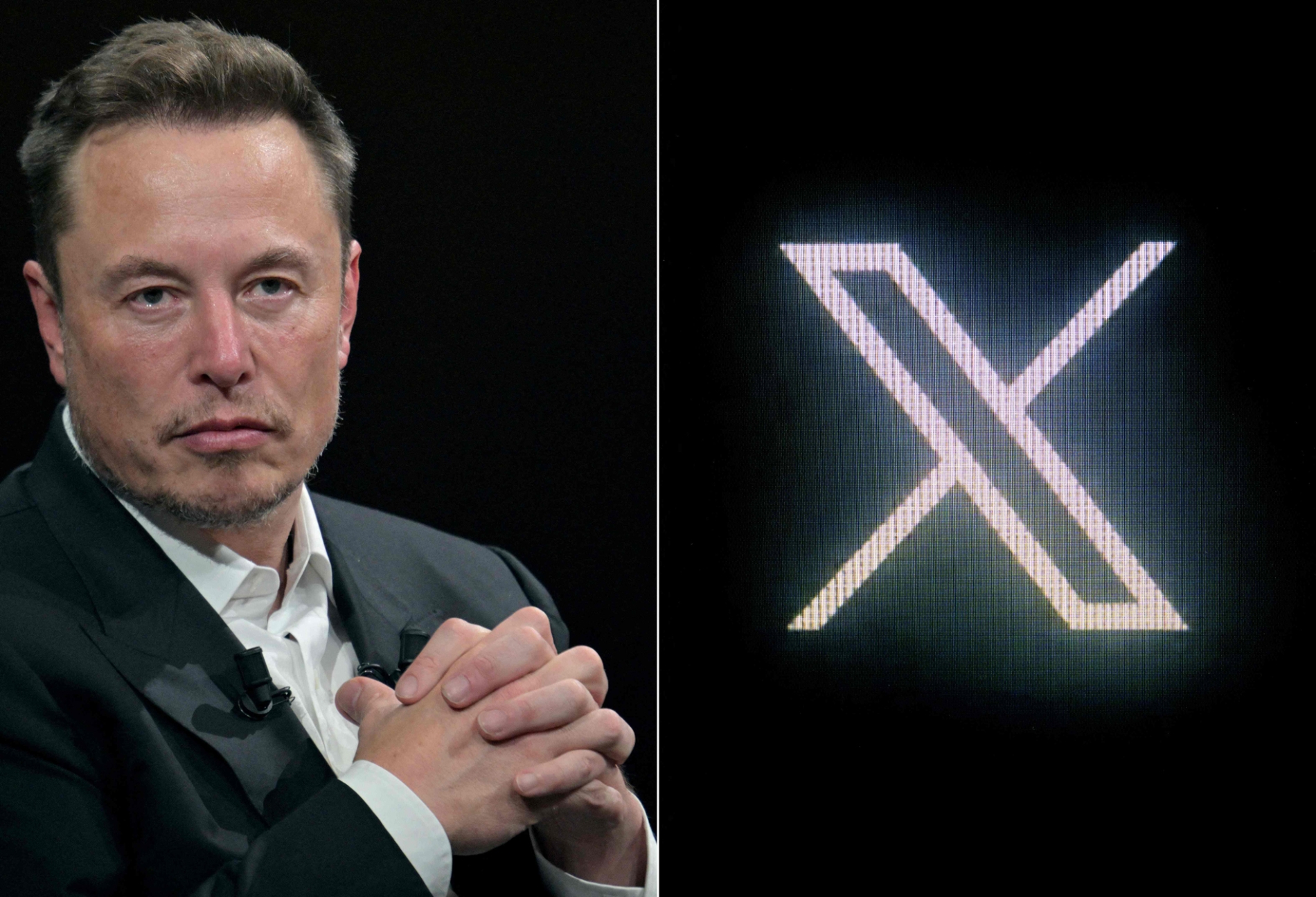

Last month, the billionaire businessman Elon Musk, famed for his SpaceX rockets and Tesla cars, launched a new project generated by artificial intelligence: Grokipedia. Developed by his company xAI, the initiative is conceived as an AI-driven rival to Wikipedia, which Musk and other conservative activists have long accused of liberal bias.

The rollout of Grokipedia is a landmark moment for the internet. In a time defined by a surfeit of information and a shortage of trust, it amounts to much more than a clash of two databases. Its launch reveals two competing visions for how human knowledge should be organised in the digital age. Between them lies an unresolved question: who can get closer to the truth, humans or AI?

Wikipedia, an online open-source encyclopaedia written by human contributors, is the largest repository of thought in history. Musk’s challenge to it comes at a time when the idea of truth itself is subject to dispute.

Gatekeeper of knowledge

Two years ago, Musk launched an attack on the Wikimedia Foundation, calling on his followers to stop donating to the non-profit host of Wikipedia until it “regained neutrality.” In one of his sarcastic X (formerly Twitter) posts, he said he would donate $1bn to the foundation if it changed its name to ‘Dickipedia’.

He was shaping a much deeper narrative: the idea that AI might be the only way to restore the concept of truth in an age of bitter dispute. In doing so, he sought to shift the centre of gravity away from Wikipedia’s community of volunteer writers and editors and toward a silent algorithm.

Musk launched the Grok chatbot in late 2023 as an AI-based assistant. From the outset, he hinted at a more ambitious project that would ‘redefine knowledge’. In March 2024, leaked technical reports revealed that xAI was building a massive database in collaboration with search engines and academic institutions to train a system capable of writing encyclopaedic articles in a neutral style. In July that year, Musk confirmed in a private meeting with the company’s engineers that “Grok will not only answer, but write.”

Manual testing of Grokipedia began in August this year, and the site was announced to the public in late October, presented as “the free encyclopaedia without bias.”

Its entries are generated through extensive analysis of open-source data, including scientific reports, government archives, and publicly available academic materials. After the text is produced, it is reviewed by an internal system for consistency and source verification before being published.

Unlike on Wikipedia, users cannot edit articles. Their only option is to report errors or provide feedback, which is later integrated into the review process. This closed structure has led critics to describe it as a ‘human-less encyclopaedia,’ while supporters see it as removing ‘human noise’ from the process of knowledge creation.

Only a few hours after the official launch, the new site’s servers went down due to heavy traffic from curious users. Social media was flooded with images of the error page, along with sarcastic comments about ‘the encyclopaedia of neutrality that began with instability.’

Why sourcing matters

Grokipedia contained nearly 900,000 articles at its launch, most of them generated automatically or partially copied from open databases. Users who compared Grokipedia and Wikipedia found linguistic and structural similarities across many entries, leading some to accuse the new platform of copying Wikipedia.

Musk’s team said its algorithm does not copy text but reframes it and uses broader data sources, and that any overlap is the result of the standardised nature of encyclopaedic writing. But some early articles were almost identical to their Wikipedia counterparts.

Grokipedia’s supporters argued that these similarities were natural in its early stages, since no new encyclopaedia could begin entirely from scratch. Its critics said Grokipedia’s AI depended on the work of Wikipedia’s human volunteers.

The central question remains, however: can AI write with neutrality? And do claims that it can overlook the fact that AI relies on the very material that shaped it, including the biases embedded in that material?